Last year, Google added a new feature to its search engine called “AI Overviews.” This feature uses Gemini, Google’s proprietary large language model (LLM), to generate responses to users’ queries. Google controls over 90 percent of the search engine market, and when “AI Overviews” debuted, the feature immediately became the subject of intense praise and scrutiny from the engine’s massive user base.

LLMs fundamentally change the ways people use the internet to access and comprehend information. In a 2025 research survey, 75.1 percent of participants reported using LLMs like Gemini, ChatGPT (OpenAI) and Copilot (Microsoft) for searching. Gen Z respondents used AI search at an even higher rate of 82 percent.

LLMs are usurping the role of search engines in the digital information economy. If left unchecked, this trend will have grave consequences for the health of the internet and the literacy of its users.

In order for AI models to deliver accurate and up-to-date information to users, they require a strong database of human-gathered information.

Much of this information derives from contemporary news organizations and media outlets, which rely on revenue from search to operate. According to a company financial statement, roughly a sixth of The New York Times’ revenue in 2024 came from digital advertising. Media companies that do not offer a subscription rely even more on digital advertising to stay profitable. Vox Media, for example, claims on its website that “advertising continues to make up the bulk of our revenue.”

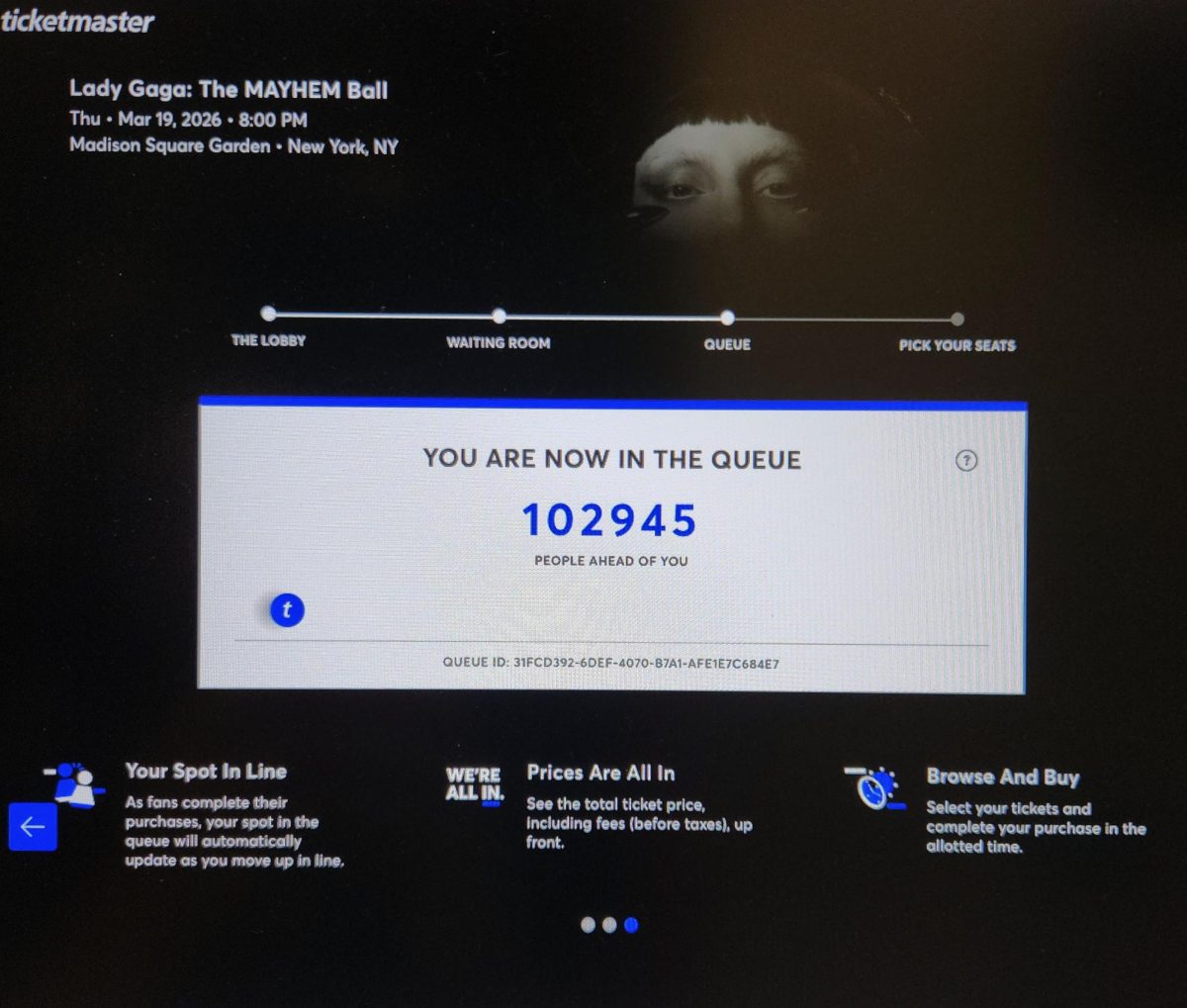

The revenue generated by a digital ad depends largely on the number of impressions it receives. By answering queries without sending users to the pages where ads appear, LLMs deprive sites of this revenue. Non-dominant media outlets, especially ones specializing in more niche subjects, also rely heavily on search engine optimization (SEO) to find readers and build a following to support their work. A mass abandonment of search engines makes it harder for independent writers, journalists and creators to build careers and companies.

LLMs do not exclusively rely on the internet to source information, nor do they only draw from sources reliant on SEO and advertising revenue. Many databases, think tanks and research centers are funded by other means. However, news and media outlets update constantly, providing more up-to-date information on current events than other sources. In the private media landscape, advertising is still the best way for these websites to operate without charging users directly, and LLMs undermine this process by completely hiding ads from users.

Additionally, the ways LLMs sort and present information make it difficult for users to critically engage with the information they are receiving. AI algorithms are a type of “black box,” meaning neither users nor programmers fully understand how the model generates its responses. When an LLM serves information to a user, it is not always clear how that information was sourced and processed.

Good critical thinking requires evaluating the sources of information you engage with. Knowing the biases of a news outlet, such as Fox News or MSNBC, enables one to make informed choices for interpreting and using information. Evaluating bias in an LLM is impossible because its methodologies are hidden.

Generative AI tools like Midjourney and Sora enable the creation and spread of misinformation at unprecedented levels, making it especially important to source information critically. In a Pew Research Center survey following the 2024 presidential election, 73 percent of respondents said they had heard “inaccurate news” about the race “at least somewhat often.” Additionally, roughly half of respondents said it was “difficult to determine what is true and what is not.”

To avoid misinformation, one should gather information directly from reliable and trustworthy sources. AI algorithms are far from infallible and are known to hallucinate, fabricating information to answer search queries. Additionally, when an LLM provides information on a current event, it may leave out important context that could otherwise be gleaned by reading a proper news article.

Our world demands that our attention be split in more ways than we can accommodate. Since attention is such a valuable currency, the idea of spending it manually sourcing information may be unappealing, especially when LLMs can conveniently dilute that information for quick consumption and deliver it to you instead. But knowledge is power, and if you want to contribute to the creation of a better-informed public alongside a healthier information ecosystem, then you should avoid using AI as your primary search engine. In doing so, you will not only be supporting the existence of a free press, but also helping yourself become a better critical thinker and engaged citizen.